The arrival of WEB 2.0 brought dynamic content through the use of technologies such as Java, Flash and PHP.

Consequently, it also widened the attack surface. Websites became prettier, more interactive, easier to update and also easier to attack!

The demand for more functionality came, as is often the case, at the cost of security.

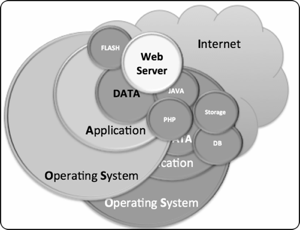

The four diagrams below illustrate the differences between a WEB 1.0 and a WEB 2.0 architecture and highlight the increased attack surface.

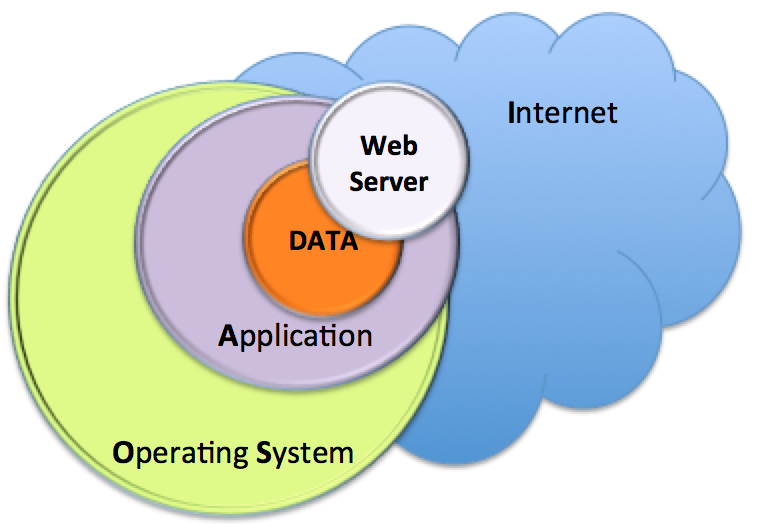

In a typical WEB 1.0 architecture, besides the physical, human and network security considerations, protecting the data depends on the Operating System and the application security layers. Typically, the application security layer is restricted to the Web Server (e.g. Apache) if no other services/applications are exposed to the Internet.

Diagram 1 - WEB 1.0 Typical Architecture

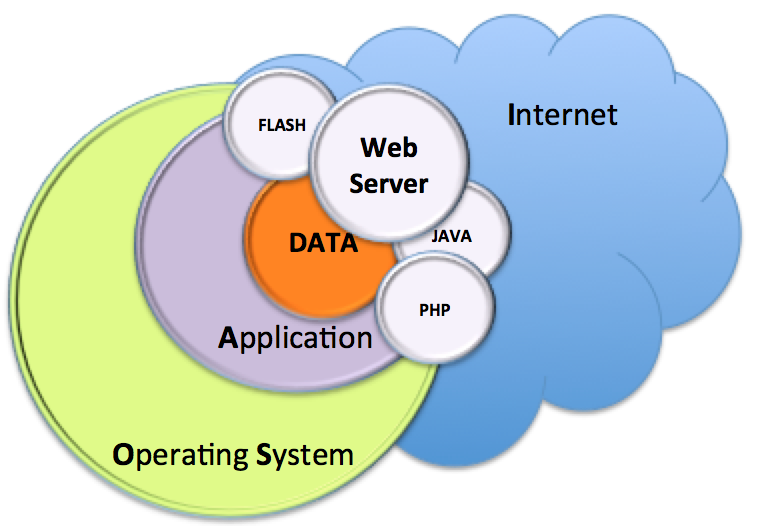

A WEB 2.0 Architecture introduces a number of extra applications which may be exposed to the Internet either directly or through a Web Server. Those extra applications provide the interface to dynamically display and manipulate the data.

Diagram 2 - WEB 2.0 Typical Architecture

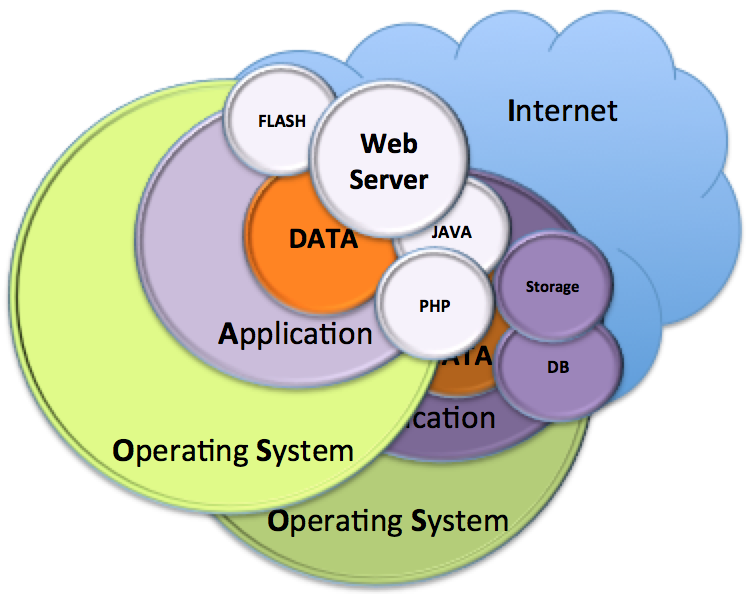

For larger and more complex WEB 2.0 websites, those applications may not all be located on one server. They may instead rely on remote/centralised storage locations and on more advanced data frameworks such as databases.

Diagram 3 - WEB 2.0 Extended Architecture

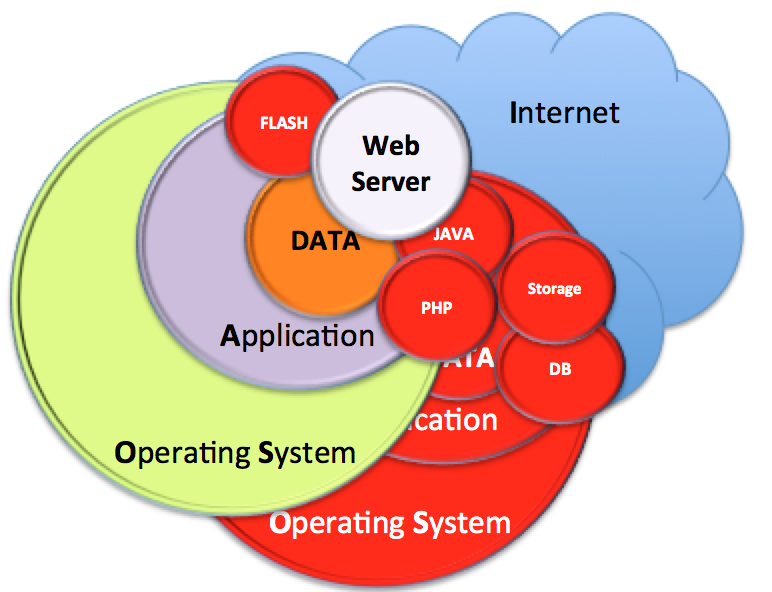

Those extra applications and servers/locations also introduce extra attack vectors as they increase the chances of misconfiguration, bugs, back doors, entry points, complexity, etc.

Diagram 4 - WEB 2.0 Increased Attack Vectors in Red

The problem is that not all websites need to generate dynamic content on demand. In fact, most websites could happily be static/WEB 1.0.

The reason almost all current websites use technologies such as PHP or Flash is that nearly all website creation frameworks now only offer a WEB 2.0 backend.

WordPress, for example, is a very popular application that provides an easy and user-friendly way to create great-looking websites with little IT knowledge. Yet it uses PHP and is consistently peppered with security alerts and issues. The original appeal of WordPress was for creating blogs: as new posts get added, the website is dynamically updated to display the newest articles first, archive the older ones, group the posts by categories, etc.

There are two issues with this type of solution:

1) As the website and its content grow, dynamically creating the content on demand requires an increasing amount of server resources. There are techniques to optimise such requests, but a dynamic website will always consume more resources than one only serving static webpages. This will eventually result in slow page loads or timeout errors.

2) Websites whose main purpose has nothing to do with blogging still use such software packages to dynamically generate what is in fact static content, because those applications have become the default website creation packages offered by many web hosting providers. Does a website really need a dynamic blog platform to publish only sporadic news a few times a year?

From a security and performance point of view, it makes more sense to host a static website and reduce the number of applications being used to produce the webpages. That way, one only has to secure (or rely on a web hosting company to secure) the Operating System and the web server application (singular, not plural).

A live example of what can be done with a WEB 1.0 website in a WEB 2.0 world is this very website.

It is entirely static as far as you, the visitors, are concerned. Yet, every time I publish a post on this blog, the categories, pages index, RSS, etc. are all dynamically generated… once! Only once, when the new post is published, as opposed to every time a visitor accesses the page, which is the case with a typical WEB 2.0 website.
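As an illustration of the "generate once, at publish time" principle (and not the actual script behind this website), a minimal Python sketch could look like the one below. The sample posts, the field names (`slug`, `title`, `date`, `category`, `body`) and the output file names are all assumptions made up for the example.

```python
from collections import defaultdict
from html import escape
from pathlib import Path

# Hypothetical sample posts; a real script would load these from local files.
POSTS = [
    {"slug": "hello", "title": "Hello", "date": "2013-01-10",
     "category": "news", "body": "First post."},
    {"slug": "static-sites", "title": "Static sites", "date": "2013-03-02",
     "category": "security", "body": "Fewer moving parts."},
    {"slug": "update", "title": "Update", "date": "2013-02-14",
     "category": "news", "body": "A short update."},
]


def render_page(title, body_html):
    """Wrap a body fragment in a minimal HTML5 page."""
    return (
        "<!DOCTYPE html>\n"
        '<html><head><meta charset="utf-8">'
        f"<title>{escape(title)}</title></head>\n"
        f"<body><h1>{escape(title)}</h1>\n{body_html}\n</body></html>\n"
    )


def link_list(posts):
    """Render posts as an HTML list of links, in the order given."""
    items = "\n".join(
        f'<li><a href="{p["slug"]}.html">{escape(p["title"])}</a> ({p["date"]})</li>'
        for p in posts
    )
    return f"<ul>\n{items}\n</ul>"


def build_site(posts, out_dir):
    """Write every page once, at publish time; return the file names written."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    newest_first = sorted(posts, key=lambda p: p["date"], reverse=True)

    # The index lists every post, newest first.
    pages = {"index.html": render_page("All posts", link_list(newest_first))}
    by_category = defaultdict(list)
    for post in newest_first:
        body = f"<p>{escape(post['body'])}</p>"
        pages[f"{post['slug']}.html"] = render_page(post["title"], body)
        by_category[post["category"]].append(post)
    # One listing page per category.
    for name, members in by_category.items():
        pages[f"category-{name}.html"] = render_page(
            f"Category: {name}", link_list(members)
        )

    for filename, html in pages.items():
        (out / filename).write_text(html, encoding="utf-8")
    return sorted(pages)
```

Calling `build_site(POSTS, "site")` writes plain HTML files into a local `site` folder. The web server then only has to hand out those files; no code runs when a visitor requests a page.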

To achieve this, I created a script which I run on my laptop when I want to publish a new post. It dynamically updates a local copy of this website with all the changes; then, if I am happy with the content, it uploads the changes to the web server.
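One possible way to work out which files need uploading after a local rebuild is to compare content hashes against a manifest saved at the previous publish. The Python sketch below is only an illustration under that assumption, not the script described here: the manifest file and the `upload` and `delete` callables are hypothetical hooks standing in for whatever transfer method the web host supports.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def snapshot(site_dir):
    """Map each file's path (relative to site_dir) to its content digest."""
    root = Path(site_dir)
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def plan_upload(current, previous):
    """Compare two snapshots; return (files to upload, files to delete)."""
    changed = sorted(
        name for name, digest in current.items() if previous.get(name) != digest
    )
    removed = sorted(name for name in previous if name not in current)
    return changed, removed


def publish(site_dir, manifest_path, upload, delete):
    """Transfer only what changed since the last publish, then save the manifest.

    `upload(local_path, remote_name)` and `delete(remote_name)` are
    caller-supplied, hypothetical hooks. Keep the manifest outside
    site_dir so it is not treated as part of the website.
    """
    manifest = Path(manifest_path)
    previous = {}
    if manifest.exists():
        previous = json.loads(manifest.read_text(encoding="utf-8"))
    current = snapshot(site_dir)
    changed, removed = plan_upload(current, previous)
    for name in changed:
        upload(Path(site_dir) / name, name)
    for name in removed:
        delete(name)
    manifest.write_text(
        json.dumps(current, indent=2, sort_keys=True), encoding="utf-8"
    )
    return changed, removed
```

On the first run there is no manifest, so every file counts as changed. Later runs only transfer new or modified pages and report the ones that have disappeared from the local copy.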

The trade-offs are that publishing a new post takes a few minutes, as the whole website gets regenerated locally each time, and that I am using a flat file structure, which means this approach may not be appropriate for very large websites.

However, I believe the benefits are far greater. I always have a local backup of this website on my laptop, which acts as the master copy. The performance for visitors has improved dramatically and the attack surface has been reduced (I still rely on my web hosting provider to do their part). I also have full control and knowledge of what is running on my website: it is pure HTML5/CSS (and a bit of JavaScript, which I couldn’t do without for some cosmetic elements). Finally, the outcome still looks like a modern website (I hope!) and it has many, if not all, of the features of a PHP-generated website but without the PHP, thus without the related security issues!

I will hopefully get around to cleaning up the code so I can eventually publish the script. I would, however, like to build a GUI and allow more visual customisation before making it public. If this is something you are interested in, feel free to contact me.

The key message is that before creating/publishing a website, you should really consider whether you actually need all the bloat of a dynamic WEB 2.0 framework and all the security issues that come with it! Try to move any number-crunching/resource-consuming/dynamic activities offline, far away from the claws of the Internet. You might solve your backup, security and performance issues in the process!

Time to go back to the source :)

How to reduce WEB 2.0 attack surface by going back to WEB 1.0, the dynamic way!